Lo, this only have I found, that God hath made man upright; but they have sought out many inventions.

—Ecclesiastes, 250 BC

Greeks Bearing Gifts

From Antikythera to AI—tracking the labyrinthine path of technology’s progress.

By Simon Winchester

Yahagi Bridge at Okazaki on the Tokaido, by Hokusai, c. 1834. The Metropolitan Museum of Art, H.O. Havemeyer Collection, bequest of Mrs. H.O. Havemeyer, 1929.


All of our exalted technological progress, civilization for that matter, is comparable to an ax in the hand of a pathological criminal.

—Albert Einstein, 1917

This issue of Lapham’s Quarterly prompts the questions of mankind’s relation to the machine—best friend or worst enemy, saving grace or engine of doom? Such questions have been with us since James Watt’s introduction of the first efficient steam engine in the same year that Thomas Jefferson wrote the Declaration of Independence.

But lately they have been fortified with the not entirely fanciful notion that machine-made intelligence, now freed like a genie from its bottle, and still writhing and squirming through its birth pangs, will soon begin to grow phenomenally, will assume the role of some fiendish Rex Imperator, and will ultimately come to rule us all, for always, and with no evident existential benefit to the sorry and vulnerable weakness that is humankind.

Is such a worry justified? Do periods of rapid technological advance necessarily bring with them accelerating fears of the future? It was not always so, especially during the headier days of scientific progress. The introduction of the railway train did not necessarily lead to the proximate fear that it might kill a man. When a locomotive lethally injured William Huskisson on the afternoon of September 15, 1830, there was such giddy excitement in the air that an accident seemed quite out of the question.

The tragedy was caused by the Rocket, newfangled and terrifically noisy, breathing fire, gushing steam, and clanking metal, an iron horse in full gallop. But when it knocked the poor man down—elevating a British government minister of only passing distinction to the indelible status of being the first-ever victim of a moving train—the event was occasioned precisely because the very idea of steam-powered locomotion was so new. The thing was so unexpected and preposterous, the engine’s sudden arrival on the newly built track linking Manchester to Liverpool so utterly unanticipated, that we can reasonably conclude that Huskisson, who had incautiously stepped down from his assigned carriage to see a friend in another, was killed by nothing less than technological stealth. Early science, imbued with optimism and hubris, seldom supposed that any ill might come about.

Which seems so often to have been the way with the new, with mishaps being so frequently the inadvertent handmaiden of invention. Would-be astronauts burn up in their capsule while still on the ground; rubber rings stiffen in an unseasonable Florida cold and cause a spaceship to explode; an algorithm misses a line of code, and a million doses of vaccine go astray; where once a housefly might land amusingly on a bowl of soup, now a steel cog is discovered deep within a factory-made apple pie. Such perceived shortcomings of technology inevitably provide rich fodder for a public still curiously keen to scatter schadenfreude where it may, especially when anything new appears to etch a blip onto their comfortingly and familiarly flat horizon.

Though technology as a concept traces back to classical times, when it described the systematic treatment of knowledge in its broadest sense, its more contemporary usage relates almost wholly either to the mechanical, the electronic, or the atomic. And though the consequences of all three have been broad, ubiquitous, and profound, we have really had little enough time to consider them thoroughly. The sheer newness of the thing intrigued Antoine de Saint-Exupéry when he first considered the sleekly mammalian curves of a well-made airplane. We have just three centuries of experience with the mechanical new and even less time—under a century—fully to consider the accumulating benefits and disbenefits of its electronic and nuclear kinsmen. Except, though, for one curious outlier, which predates all and puzzles us still.

The Antikythera mechanism was a device recovered from an ancient shipwreck in the Aegean by sponge divers more than a century ago. Once freed from the accumulations of submarine life, it was shown to be two thousand years old: thirty well-fashioned, hand-cut bronze gearwheels with notched teeth, a turning handle, and plates inscribed with instructions in Koine Greek, all originally fitted into a wooden box. This engine—the mechanism—appears to have been a hand-cranked computational machine, greater in ambition than in accuracy maybe, but which could predict the movements of such astronomical bodies as were then known to those sky watchers who gazed from the headlands of the Peloponnese.

Fighting a Fire, by William P. Chappel, c. 1875. The Metropolitan Museum of Art, Edward W.C. Arnold Collection of New York Prints, Maps, and Pictures, bequest of Edward W.C. Arnold, 1954.

Whatever technical wonders might later be created in the Industrial Revolution, the Greeks had an evident aptitude and appetite for mechanics, as Plutarch reminds us. On the evidence of this one creation, this aptitude seems to have been as hardwired in them as was the fashioning of spearheads or wattle fences or stoneware pots to others in worlds and times beyond. While Pliny the Elder and Cicero mention similar technology—as does the Book of Tang, describing an eighth-century armillary sphere made in China—the Antikythera mechanism remains the one such device ever found. Little of an intricacy equal to it was to be made anywhere for the two thousand years following—a puzzle still unsolved. When mechanical invention did reemerge into the human mind, it did so in double-quick time and in a tide that has never since ceased its running—almost as if it had been pent up for two millennia or more.

Technology proper arrived in a three-step process, with further steps certainly still to come. The first step, the notion that some mechanical arrangement might be persuaded to perform useful physical work rather than mere astronomical cogitation, was born of one demonstrable and incontrovertible fact: water heated to its boiling point transmutes into a gaseous state, steam, which occupies a volume fully 1,700 times greater than its liquid origin.

It took a raft of eighteenth-century British would-be engineers to apprehend that great use could somehow be made of this property. It eventually fell to the Scotsman James Watt to perfect, construct, and try out the intricate arrangement of pistons and exactly machined cylinders and armatures that could efficiently undertake steam-powered tasks. Afterward only the cleverness of mechanical engineers (the term entering the English language in tandem with the invention) could determine exactly how the immense potential power of a steam engine might best be employed. The Industrial Revolution is thus wholly indebted to Watt: no more seminal a device than his engine and its countless derivatives would be born for almost two centuries.

That first leap alone was enough to offer up the full spectrum of reactions to technology’s perceived benefit and cost. The advantages were obvious, somewhat anticipated, and often phenomenal: steam-powered factories were thrown up, manufacturing was inaugurated (Thomas Carlyle wrote somewhat sardonically of the Age of Machinery), new cities were born, populations were moved, demographics shifted, old cities changed their shapes and swelled prodigiously, entrepreneurs abounded, fortunes were accumulated, physical sciences became newly valued, new materials were born, fresh patterns of demand were created, new trade routes were established to meet them, empires altered their size and configurations, competition accelerated, advertising was inaugurated, and a world was swiftly awash with products all made to a greater or lesser extent by machinery powered by steam.

And yet. Among the more swiftly apparent of the revolution’s disbenefits was the matter of the environment: the sudden change in the color of the industrial sky and the change in quality of the industrial rivers and lakes. The burning of the immense quantities of fuel (coal, mainly) needed to heat the engines’ water, so as to alter its phase and make it perform its duties, spewed carbon pollutants all around, ruining arcadia forever. Satanic mills were born. Dirt was everywhere. Gustave Doré painted it. Charles Dickens and Henry Mayhew wrote about it.

Other effects were less overt and more languid in their arrival. The Royal Navy factory built in the southern city of Portsmouth around the turn of the nineteenth century was the first in the world to harness steam power, for the making of a sailing vessel’s pulley blocks. Blocks had been used for centuries; Herodotus reminds us how blocks of much the same design could have been employed in the making of the pyramids of Khufu. But a fully furnished man-of-war might need fourteen hundred such devices—some small, enabling a sailor to raise and lower a signal flag from a yardarm, others massive confections of elm wood and iron and with sheaves of lignum vitae through which to pass the ropes, and which shipboard teams would use to haul up a five-ton anchor or a storm-drenched mainsail, with all the mechanical advantage that block-and-tackle arrangements have been known to offer since antiquity.

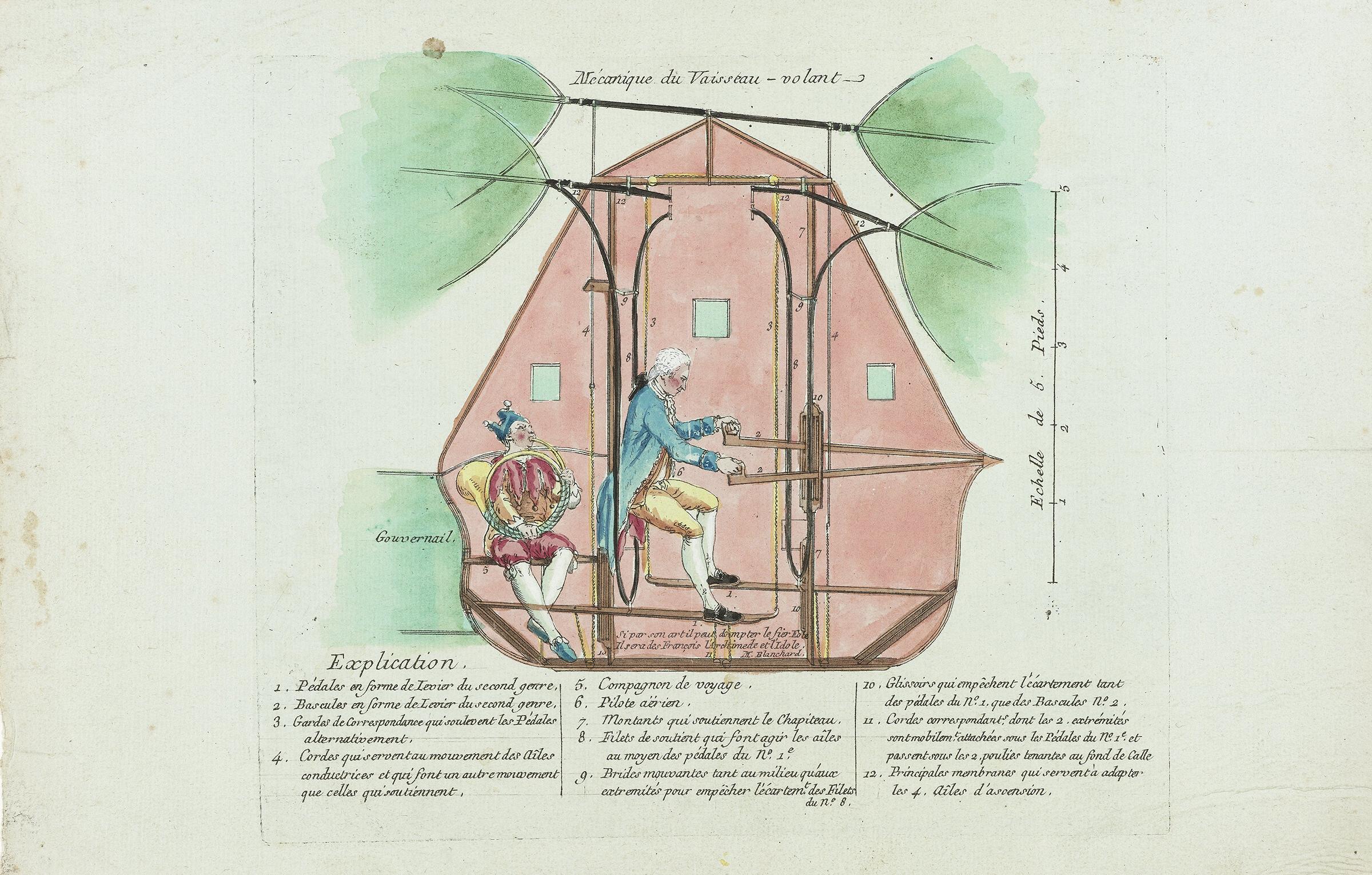

Diagram of French balloonist Jean-Pierre Blanchard’s “Flying Vessel” etching, c. 1781. Rijksmuseum, purchased with the support of the F.G. Waller-Fonds.

At the turn of the century, with Napoleon causing headaches for the British Admiralty worldwide, ever greater armadas were needed and ever more pulley blocks required, demand vastly outpacing the output of the legions of cottage-bound carpenters who had fashioned them by hand for centuries. The engineer Marc Isambard Brunel (father of the better-known bridge- and shipbuilder Isambard Kingdom Brunel) then reckoned that steam power could help make blocks more quickly and more accurately and in such numbers as the navy might ever need, and he hired Henry Maudslay, another engineer, to create machinery for the Portsmouth Block Mills to perform the necessary task. Brunel calculated that just sixteen exactly defined steps were required to turn the trunk of an elm tree into a batch of pulley blocks able to withstand years of pounding by monstrous seas and ice storms and the rough handling of men and ships at battle stations.

The factory, six years in the making, proved a consummate success, a central component of Britain’s imperial expansion, a testament to the durability of well-made iron machinery, operating without complaint until the 1960s. Few men were needed to work inside the new plant, just those who kept the engine fueled and watered and a small corps of journeymen with oiled rags and clutches of cotton waste who lubricated the gear trains.

In scores of villages scattered across the southern English countryside, hundreds of carpenters whose livelihoods had entirely depended on the navy’s custom suddenly found themselves unneeded, superfluous, their once-essential skills rendered irrelevant. We know little of their individual fates; most probably they joined the hordes of other similarly displaced craftsmen compelled to head off to the cities to seek work. The English social order was thus subtly changed, and though neither Watt nor Maudslay nor any others gave more than scant attention to the consequences of their inventive energies—it would take figures like Lewis Mumford and Thorstein Veblen and Marshall McLuhan to pursue such inquiry later on—there was a certain contemporary unsettling. Groups like the Luddites and the Chartists and even their predecessors the Diggers managed to whip up some fervor, for a while, and left a legacy of skepticism that remains simmering still today.

But the fork in the road had been seen, recognized, appreciated, and chosen. The Luddites were eventually disdained, the skeptics seen off, the path toward machine-made progress most decidedly taken. By the time of the Great Exhibition in London in 1851, it is fair to say that mechanical technology had in short order become widely accepted as a Good Thing.

If there was still any dithering, it took the manufacture of automobiles to consolidate the relationship between man and machinery. Eighty years on from Portsmouth, the Michigan-born Henry Ford became the engineer who most keenly persuaded society, especially in America, to ratify the overwhelmingly beneficial role of technology. His intellectual rival at the time, Henry Royce—who, like Ford, was born in 1863 (though Royce in Huntingdonshire)—believed as Ford did in the future of the car. But the two men sported wildly different visions. Royce believed that craftsmen could transform the then crude internal combustion engines into marvels of precision and beauty, as perfect as skill and fine tools could make them; Ford, by contrast, believed that the motorcar might become a means of transport for the everyman, allowing the majesty of the country to be experienced by anyone with just a few hundred dollars to his name. He believed that inexpensive and interchangeable parts, and not too many of them, could be assembled on production lines to make millions of cars, for the benefit of all, while Royce employed discrete corps of craftsmen who painstakingly assembled the finest machines in the world, legends of precision and grace. History has judged Ford’s vision to be the more enduring, the model for the making of volumes of almost anything, mobile or not. This sequential system of mechanized or manual operations for the manufacture of products was to be the basis of what Tench Coxe had predicted a century and a half before: the American manufacturing system, soon to be dominant around the globe.

Yet the system of technology-based manufacturing turned out to have limits, imposed not by any proximate disadvantages, social or otherwise, but by another, unexpected factor: the physical limitations of materials. Engineering the alloys of steel and titanium and nickel and cobalt of which so much contemporary machinery is made, under commercial pressures demanding ever greater efficiency (or profit, or speed, or power), has in recent times collided with the harsh realities of metallurgy.

The near-fatal explosion of a port-side engine of a fully laden Qantas jetliner en route from Singapore to Sydney in November 2010 illustrates the point. Buried deep within the bowels of one of the Airbus’ four $13 million Rolls-Royce Trent 900 engines was a slender alloy tube, designed to supply lubricating oil to the red-hot high-pressure combustion chamber of what is still thought of as the most technologically advanced civilian jet engine ever made. The plane had already performed almost two thousand landing and takeoff cycles; for this flight it had flown from Los Angeles to Heathrow and from there to Singapore, and the engines had taken the customary punishment that attends such cycles and had emerged, as usual, unscathed.

’Tis the sport to have the engineer / Hoist with his own petard.

—William Shakespeare, 1600

But five minutes after takeoff from Changi Airport, with all four engines spooled up to maximum power to bring the aircraft and its four hundred passengers up into the tropical sky, the tiny stub pipe in the number two port-side engine suddenly fractured and split, sending a spray of oil directly onto the red-hot spinning titanium turbine disk, flashing into fire and driving the temperature briefly well above the melting point of the disk itself. The disk began steadily to bend, then to wobble and break apart, and the turbine blades attached to it started to separate and were then flung out of the engine as shrapnel, tearing through the cowling, ripping the wing surfaces, breaking wires, severing conduits and pipes that carried hydraulic fluid, and in a trice rendering the left-hand side of the plane wholly inoperable.

The episode ended more or less happily: the plane eventually returned to the runway, and the startled passengers safely disembarked. But the subsequent investigation concluded with pitiless disinterest that this tiny metal pipe, one side of which may have been marginally mismachined but which had been inspected and passed into service, had failed, its structure suddenly unable to perform the duties assigned to it.

By contrast, technologies centered on solid-state printed circuitry suffer no metal fatigue, no friction losses, no wear. The idea of such circuitry was bruited in 1925 by a Leipzig physicist named Julius Lilienfeld, who filed a patent suggesting that a small electrical signal applied to a semiconducting substance like germanium, silicon, or gallium arsenide could be made to control a much larger current, to switch it on or off or amplify it, without the involvement of any moving part.

The idea took a generation to marinate in the minds of physicists around the world until shortly before Christmas 1947, when a trio of researchers at Bell Labs in New Jersey fashioned a working prototype of Lilienfeld’s idea and named the phenomenon it created the transistor effect. The invention of the first working transistor marked the moment when technology’s primal driving power switched from Newtonian mechanics to Einsteinian theoretics—unarguably the most profound development in the technological universe and the second of the three steps, or leaps, that have come to define this aspect of the modern human era.

Terracotta onos, a leg guard for use in carding wool, Greece, late sixth century bc. The Metropolitan Museum of Art, Rogers Fund, 1906.

The sublime simplicity of the transistor—its ability to switch electricity on or off, to be there or not, to be counted as 1 or 0, and thus to reside at, and essentially to be, the heart of all contemporary computer coding—renders it absolutely, totally, entirely essential to contemporary existence. The devices that populate the processors at the heart of almost all electronics, from cell phones and navigation devices and space shuttles to Alexas and Kindles and wristwatches and weather-forecasting supercomputers and, most significantly of all, to the functioning of the internet, are being manufactured at rates and in numbers that quite beggar belief: thirteen sextillion made since 1947 being the latest accepted figure, a number greater than the aggregated total of all the stars in the galaxy or the accretion of all the cells in a human body.

There are nearly twelve billion transistors in the central operating chip in an iPhone 12, crammed into a morsel of silicon measuring just ninety square millimeters. And yet in 1947, a single transistor was fully the size of an infant’s hand, with its inventors having no clear idea of the role its offspring would play in human society. The sheer proliferation of the device points to where the physical limitations of this kind of technology lie: not in the integrity of its materials, as was true back in the purely mechanical world, but in the ever-diminishing size of the transistors themselves, with so many of them now forced into such tiny pieces of semiconducting real estate.

They are now made so small—made by machines created in the Netherlands that are so gigantic that several wide-bodied jets are needed to carry each one to the chip-fabrication plant—that they operate down on the atomic and near-subatomic levels, their molecules almost touching and in imminent danger of interfering with one another and causing atomic short circuits.

Mention of atomic-level interference brings to mind the third great leap that has been made by technology: the practical fissioning of an atom and the release of the energy that Albert Einstein had long before calculated resided within it. Leo Szilard famously imagined the theoretical possibility of a chain reaction in 1933, while he was waiting for a London traffic light to change. Enrico Fermi then created the first controlled, self-sustaining nuclear chain reaction in the basement of a football stadium in Chicago in 1942, followed by the first detonation of an uncontrolled fission device in the summer of 1945, and finally, in August of that year, the utter destruction of two Japanese cities, Hiroshima and Nagasaki, by the first uses of nuclear technology, employed as an American weapon of war. The atom bomb has not been used since—a measure, one hopes, of our acknowledgment of the terrible consequences of its use.

That the world’s only nuclear attacks were perpetrated on Japan, of all places, serves to expose one irony about technology’s current hold on humanity. Japan is a country much given to the early adoption and subsequent enthusiastic use of technology. Canon, Sony, Toshiba, Toyota are all companies that have flourished mightily since World War II making products, from cameras to cars, that are revered for their precision and exquisite functionality. Yet at the same time, the country has held in the highest esteem its own centuries-old homegrown traditions of craftsmanship—carpenters and knife grinders, temple builders and lacquerware makers, ceramicists and those who work in bamboo are held in equally high regard, with the finest in their field accorded government-backed status as “living national treasures,” with a pension and a standing that encourages others to follow in their footsteps.

In this, Japan appears unique. While such places as Germany, the United States, Italy, and Britain currently bristle with engineers who also have designed and produced objects of great precision and value, paragons of technological achievement, they do not hold in especially high esteem those who carve and chamfer and polish and shape by hand. Savile Row and Rolls-Royce and their like are diminished names these days, their cherished skills no longer as widely revered as they once were.

Not so in Japan. At the Seiko factory in northern Honshu, while robots assemble thousands of perfectly constructed quartz-watch movements each day on its production line, on the same factory floor a small army of middle-aged men and women assemble clockwork wristwatches entirely by hand—dealing in hairsprings and mainsprings, jeweled bearings and rouge-polished bezels, each meticulously finished watch a testament to loving craftsmanship and, not surprisingly, costing a small fortune to buy.

Japan, in short, seems by way of this and a host of similar examples to have struck a balance between the employment of technology of machine-made perfection and a continuing respect for the tradition of using human skills, with all the possibility of imperfection, in the making of many kinds of goods. And Japanese consumers continue to support such handwork and to honor the “living national treasures” with an unyielding sense of pride.

Industrialism is the religion with “the machine” as the god going to answer all the prayers. Communism and capitalism were just competing sects.

—Dora Russell, 1983

Japan introduced the bullet train, the Shinkansen, in 1964, some 134 years after William Huskisson became an unfortunate casualty of the introduction of what was at the time a new kind of technology. No fatal crash attended its first journeys, nor any of its journeys in the years and decades since. These days the Tokaido line, running between Tokyo and Osaka, sends ultra-high-speed trains in each direction every six minutes on average, 130,000 of them each year. Four hundred and twenty-five thousand passengers are carried every day along the three-hundred-mile route, at speeds of up to 180 miles per hour. The average delay is just twenty-four seconds. Not a single passenger has ever been killed in a derailment or collision on the line.

The passengers who gaze out at the rice paddies and temple gates and hand-fashioned wooden structures that flash by do so in serenity and with a certainty that at least this one aspect of advancement is both safe and beautiful—and occupies a place in the spectrum of Japanese society that is no less important, and, crucially, no more important, than all the others that make the country uniquely worthy of remark. Technology has its place, and knows it. Which is perhaps just as it ought to be.

If only this were truly so. As Daniel Susskind so dismayingly notes, our computational muscle has now reached a point where we can create machines—devices variously mechanical, electronic, or atomic—that can think for themselves. And outthink us, to boot. And write coherent editorials in the Guardian. Which is why, though our trains may run safely and our clocks keep good time and our houses may remain at equable temperatures, and though not all is lost to science since in certain places our ability to perform archaic crafts remains intact, still there is an ominous note sounding not too far offstage. A new kind of algorithmic intelligence is teaching itself the miracle of Immaculate Conception. And with it comes an unsettling prospect of which one can only say: The future is a foreign country. They will do things differently there.