There is no female mind. The brain is not an organ of sex. As well speak of a female liver.

—Charlotte Perkins Gilman, 1898

Whalers (detail), by J.M.W. Turner, c. 1845. The Metropolitan Museum of Art, Catharine Lorillard Wolfe Collection, Wolfe Fund, 1896.


One night, before going to sleep, my five-year-old son whispered in my ear, “Do you see that I am thinking?” Yes, I said, as I kissed him good night. But I knew his question expressed his awareness that I couldn’t see his thoughts, which must be communicated out loud to be evident. Not only was he having a thought I couldn’t see, he was also thinking about the thought he was having. He was aware of its ineluctable privacy and was communicating this awareness to me. This “metacognitive” ability to think and communicate complex thoughts, and thoughts about our thoughts, while being aware we are doing so, is uniquely human. In the field of cognitive psychology, the awareness that other people have different minds has been called theory of mind. We acquire this ability over the first five years of life. My son’s question manifested this awareness.

The fantasy of making the contents of the mind visible—to peek beneath the cranium—is as ancient as art, religion, or magic. It may even be an aspect of falling in love. We want to transcend our capabilities, and we use symbolic means to do so. Insofar as we can speak to share our thoughts, these are social as well as private: language at once enables thought and the communication of thought. But imagine actually looking into someone’s mind, as my son may briefly have wanted me to do—or into your own, as an outsider to it. What would it mean to see what is in the mind? Can one see a thought, an experience, a feeling—a state of mind? That question, too, was inherent in my son’s whisper. You may go further and ask: What and where is the mind that is asking that question? Are we not limited in our investigation by the fact that we use our own mental tools to try to understand how those tools work? How far can we go in such a venture, and where do we start?

Any state of mind may be a starting point. Take insomnia, for instance. For a few days a while ago, as I began to fall asleep, I would seize on the initial images that drag us into slumber, and kick myself awake, as if I were making myself stumble. I realized as this was happening that I was in an excessive state of self-awareness. Hypervigilance, it seems, corresponds to an activation in the brain’s insular cortex, a region also involved in processing our sense of embodied self, homeostatic regulation (the body’s internal thermostat), and various aspects of emotional processing, among other functions. We know this, or we surmise this, because brain imaging has allowed researchers to look into the brain. And I know this because I’ve read a handful of the hundreds of thousands of articles published over the past decades in the neurosciences.

The science is still new. The imaging that underlies it is even newer. But the quest for the seat of what used to be called the soul—psyche in Greek—is as ancient as medicine itself, central to its history and to the history of philosophy.

It ought to be generally known that the source of our pleasure, merriment, laughter, and amusement, as of our grief, pain, anxiety, and tears, is none other than the brain. It is specially the organ that enables us to think, see, and hear, and to distinguish the ugly and the beautiful, the bad and the good, pleasant and unpleasant. Sometimes we judge according to convention; at other times according to the perceptions of expediency. It is the brain, too, that is the seat of madness and delirium.

These lines come from On the Sacred Disease (the name given to what we today call epilepsy), which is one of the Hippocratic texts foundational to medicine in fifth-century-BC Greece. While other thinkers would locate the mind elsewhere in the body, sometimes even throughout it, this was the first of many attempts to articulate how (to use terms from the modern philosophy of mind) mental states depend on brain states—something that would remain mysterious for millennia, and may or may not remain mysterious forever.

From the beginning, doctors and philosophers engaged in parallel inquiries. Plato concurred with the Hippocratic belief that thought was produced by the rational soul in the brain. But he assigned emotion to the heart, which housed the spirited soul. Beastly appetites, according to Plato, were produced by the appetitive soul in the liver. Aristotle also divided the soul into three but believed the brain served to cool the blood, and that the part of the soul that synthesized and made sense of emotions and sensory input resided in the heart. Alexandrian anatomists in third-century-BC Hellenistic Egypt started drawing a more precise picture of the brain, taking note of the cerebellum, for instance, and differentiating between sensory and motor nerves. The influential second-century physician Galen showed that one could render an animal immobile—“without sensation and without voluntary movement”—by wounding a cerebral ventricle. He synthesized Alexandrian anatomy with Plato’s and Aristotle’s ideas of a tripartite, hierarchical soul.

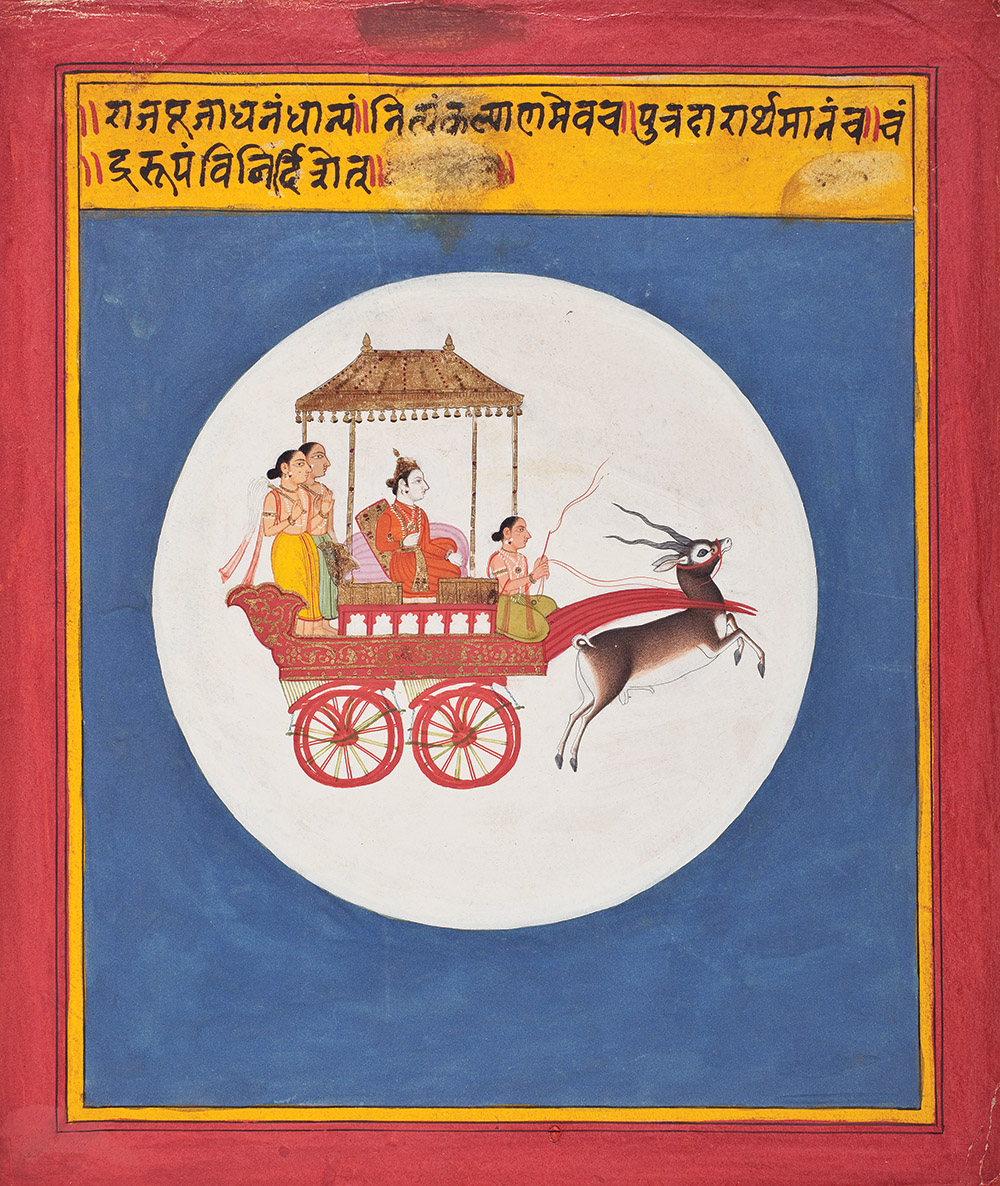

Chandra, the Moon God, folio from an Indian dream book, c. 1715. © The Los Angeles County Museum of Art, Gift of Paul F. Walter.

Over the early medieval period, there also developed a “three cell” theory, whereby each brain ventricle respectively housed imagination, cognition, and memory. This developed into a more refined “ventricular” theory, which had the sensus communis (common sense), fantasy, and imagination in the anterior ventricle; memory in the posterior ventricle; and thought and judgment in between. The divisions varied, but globally they constituted a theory of knowledge and explained how the five “external” senses transmitted information to the sensus communis, the “internal sense” that processed percepts and sensations into thoughts and produced moral and emotional judgments, all working their way into and out of memory. A great deal of metaphysics went into discussions of this “common sense,” notably by Thomas Aquinas, who understood it as an essential feature of self-awareness—perhaps, one might say, of the very “metacognitive” ability that allows us to investigate our own structures.

Yet it was always the case for philosophers that thinking about the nature of thought was dizzying, and that the mind could not, indeed should not, see itself. As Saint Augustine wrote of memory in his Confessions, “Who can plumb its depths? And yet it is a faculty of my soul. Although it is part of my nature, I cannot understand all that I am.” That question remains relevant, and “I cannot understand all that I am” still resonates in our modern age. Philosophical questions regarding how far one can look into the mind remain wide open. They also inform what it means to look into the brain.

The brain started coming into focus about five hundred years ago. It was an anatomical revolution that began in Italy and the Low Countries, with the likes of the anatomist Vesalius, who was able to revise central dicta of Galen thanks to the practice of human dissection, which had been forbidden in Galen’s day. The inauguration of neurological research was one aspect of the scientific revolution that, beginning in the seventeenth century, established the bases of modern science in Europe. The drawings of the brain by the architect Christopher Wren for Cerebri anatome, by the Oxford physician Thomas Willis, who coined the term neurologie, were an extraordinary accomplishment resulting from a technological innovation: the preservation of brains in alcohol. Until then a dead brain was a disintegrating gelatinous mass that yielded few secrets about its physiology.

Willis denied he was investigating the rational soul, which for him remained the province of religion. Until the eighteenth century, what we call science was termed natural philosophy, and its practitioners were preoccupied with issues of metaphysics and ethics: the soul, free will, determinism, and the nature of knowledge itself. Across the Channel, French philosopher René Descartes argued that mechanical operations alone could account for the material body’s perception, sensation, and movement, without the assistance of an immaterial soul. But he also turned the admittedly baffling question of how the material and immaterial can interact into the fulcrum of his provocative mind-body dualism. His claim that soul and body were entirely separate sparked considerable debate and awaited resolution.

In psychoanalysis nothing is true except the exaggerations.

—Theodor Adorno, 1951

We have, on the whole, left that dualism behind. The process of resolving Descartes’ question began once a materialist understanding of the mind became politically and ideologically acceptable. The eighteenth century saw increasingly detailed discoveries in the anatomy and physiology of humans and animals. Investigations in neuroanatomy, neurophysiology, and neuropsychology proliferated in the nineteenth century, benefiting from discoveries in chemistry. Clinical observations of patients in whom a vascular accident, say, had led to a visible deficiency started filling in the picture of how the brain worked. In 1861, by performing autopsies on patients who had lost the capacity to speak, Paul Broca identified an area in the frontal part of the brain’s left hemisphere that was crucial for speech. Here was a potent instance of how higher mental abilities were the outcome of brain activity. The region is now called Broca’s area. Many such areas were identified, mapped, and named, like so many regions on the dark side of the moon. Debates raged between those like Broca, who believed functions were localized, and their opponents, who, reacting in part to the charlatanism of phrenology (which reached its peak in popularity during the 1820s and 1830s), saw the brain as a systemic whole. But localization emerged triumphant.

Science had branched off from philosophical speculation about the mind and knowledge, which itself had turned away from natural philosophy. But once psychology became scientific, notably in 1890 with William James’ Principles of Psychology, the study of the mind started to rejoin the clinical study of the brain. The plan was now to study by scientific means perception, sensation, memory, rational thought, attention, volition, emotions, anxiety, aggression, depression, the sense of self, even consciousness. And the disciplines that constitute the neurosciences, from the molecular to the psychological level, are now engaged in this exploration—in some ways, a continuation of philosophy by empirical means.

It was a neuroanatomist and histologist rather than a psychologist who had the most important impact on neuroscience and its clinical twin, neurology. Santiago Ramón y Cajal provided the key without which we would not be where we are today: he showed that the nervous system was composed of individual neurons that “spoke” to one another across synaptic gaps. For the first time, one could see what a brain was made of, at least in part. From then on, it was a matter of understanding how neurons worked and what they did, which is no small task.

Whatever we don’t understand about brain function, we do know there are around 86 billion neurons in the brain. Each neuron has on average seven thousand synaptic connections. That makes the brain, as is often said, the most complex object in the universe. Complexity of this order is needed to produce the minds we have, though it is perhaps not sufficient for us to fathom the astronomical number of cells and connections that make up our ability to fathom anything, including a verb such as fathom, or the idea of infinity. It may be that this so-called explanatory gap is intrinsically unbridgeable, as some philosophers contend. Consider that the amount of cerebral activity concentrated in the time you spend reading this essay is mind-boggling. None of this microscopic activity is measurable by what we ordinarily experience as time, but then our experience of time cannot be reduced to what it is that enables that experience. Although we are the sum of many microscopic particles that together produce what we experience, those particles are not identical to what we experience: the large does not reduce to the small. (In this philosophical respect, the same explanatory structure can be applied to rocks: they, too, are constituted of particles yet are not identical to them.)

No explanatory gap, however, marred the optimism of early cognitive psychology, born in the 1950s out of the new field of cybernetics, or the belief, upheld in the guise of “strong AI,” that one could map the human brain as a computer. To study the mind was not to study emotions, say, or the biological brain itself, but the algorithmic operations of cognition. This was in part an outgrowth of the behaviorist school that became established in the early 1930s, whose advocates denied the importance of mental states to behavior, which they interpreted merely as reflex-like responses to stimuli rather than as manifestations of emotionally rich intentions.

Pity (detail), by William Blake, c. 1795. © The Metropolitan Museum of Art, Gift of Mrs. Robert W. Goelet, transferred from European Paintings, 1958.

But experience is richer than observable responses to stimuli. The cognitive sciences moved on over the next decades. From the 1980s there emerged the notion of evolved mental “modules” as an explanatory principle for individual and social psychology. Cognitive and developmental psychologists elaborated experimental protocols through which to analyze human behavior, including that of babies and children, sometimes but not always according to a modular model. By the 1990s, emotions, so central to all human experience, became a topic of neuroscientific investigation. With that came a fuller appreciation of theory of mind, which my five-year-old exhibited so explicitly that night.

The disciplines have merged and keep giving rise to new ones, almost as plastic and protean as the brain itself. Since the 1990s there has been an increase in the number of experiments, findings, and theories regarding the human mind, from the molecular, genetic, and cellular levels up to social psychology. Neuronal function is increasingly well understood thanks to the synergy of neurochemistry, biophysics, molecular biology, and genetics. And brain imaging has steadily gained in power.

The focus in the 1990s on the biology of the brain at the expense of its context—culture, language, socioeconomic conditions, ideas, education—was partly political. It was a way of dismissing the impact of environmental conditions on mental health. But that has changed. The “enactivist” approach to cognition puts the brain back in the body and the body back in the world to account for the intrinsically relational nature of our mental life. Social psychology looks at how individuals form their beliefs and attitudes within social contexts. Epigenetics analyzes how environment and experience instantiate biological change at the cellular level.

What is the hardest task in the world? To think.

—Ralph Waldo Emerson, 1841

There is no need to separate nature from nurture. A continuum prevails among the molecular, genetic, neurobiological, physiological, psychological, cultural, and historical levels of explanation. We are determined at many levels, and complex for that reason. This very text you read would be no more than a bunch of squiggles without cultural context, and without the culturally acquired and shared symbols we call letters and words. To look at the brain is also to look at the dynamically relational mind at work. Reading itself involves areas in the brain that are also involved in vision and receptive speech: it may trigger introspection, sure, but it is also profoundly entwined with our relationship to others.

Brain imaging has proved to be an invaluable tool in mapping brain structures and functions, such as reading, or theory of mind. If the neurosciences ignite the collective imagination, that is partly owing to the images of the brain that flood the media. These images give us the impression we can see our own living minds, but that is an old fantasy. Brain imaging is a much more complex tool than triumphant journalistic reports suggest. A picture of the brain, however detailed, cannot be a picture of a state of mind, or of a subjective experience, which may be translatable only through verbal or artistic means.

Nor are images of the brain’s landscape comparable to photographs—given the complexity of its geography of sulci, ganglia, lobes, cortices, commissures, nuclei, and fissures. We have only renditions. Two kinds of imaging technologies give us these renditions: structural ones give us the static scene, functional ones the action in motion. Structural imaging appeared early on, insofar as X-rays were applied to the visualization of the brain ventricles as early as 1918. More recent methods such as CT scans—computed tomography—which also use X-rays, give us tomograms, sections that compose the image through algorithmic reconstructions. Magnetic resonance imaging uses magnetic fields and delivers higher-resolution pictures.

But it is functional imaging that has transformed neuroscientific and neuropsychological research. It registers minute metabolic changes, enabling researchers to pinpoint which parts of the brain are most saliently at work on specific tasks compared to other parts, giving a real-time picture of cerebral activity. In fact, by 1890, a number of researchers, including William James, had already correctly reported that variations in blood flow in the brain correspond to neuronal activity—a phenomenon called neurovascular coupling—and this is what functional scans make use of. PET scans—positron-emission tomography—work by tracing injected radioactive substances to see how they bind to brain receptors. So do SPECT scans, single-photon emission computed tomography. Then in 1990 the fMRI—the functional version of MRI—was introduced. It is much less invasive than PET and often more precise in terms of spatial resolution, with the caveat that temporal resolution remains a problem, since cerebral activity is far faster than the machine.

Propylaea to the Acropolis, Athens, c. 1890. Photograph by Braun, Clément & Cie. © The J. Paul Getty Museum, Los Angeles. Digital image courtesy of the Getty’s Open Content Program.

Thanks to translational research, the application of basic research to the clinical realm, we are often in a position today to know what it means for something to be going wrong. Images are a research tool and never replace clinical examination. But they can help save lives, assisting in locating strokes, vascular anomalies, tumors, or injuries. They can help identify the onset or state of neurodegenerative disease. The fMRI may also eventually help detect signs of consciousness in victims of massive stroke or brain injury who are in a “minimally conscious state” even if they seem to be in a “vegetative state,” that is, awake but showing no signs of awareness.

But without an expert reader, the image is a map without a key or grid. In the realm of research, the generation of meaningful data from imaging depends on precise experimental protocols and specific questions or tasks put to members of a study cohort, and then on statistical interpretation and error correction. The activity picked up by an fMRI scan is never directly that of a thought or emotion; it is of the oxygenation level of blood, which reflects higher blood flow and hence neuronal activation. And since the whole brain perpetually devours energy, identifying such areas requires caution and an ability to weed out false positives. Moreover, most mental life involves the dynamic interaction of neural networks across areas of the brain, in feedback with the body, which complicates the interpretation of visible brain activity.

In medical care, patient history and a clinical sensitivity to an ailing person’s concerns precede the use of images. There are no two identical cases of a disease, just as there are as many different brains as there are people. While it takes phenomenal introspection to know one’s own state of mind—and while our state of mind is also our bodily state—it takes clinical attention to a patient’s body language and behavior to know what might be wrong.

No image of a brain can be typical of anything unless one uses heuristics to establish probable averages that would correspond to an idea of normal function. Where there is illness, something similar applies. Once a label is affixed to a pathological condition borne out by clinical examination and imaging data, one is left with a label. It is as useful in accounting for a person’s subjective experience and history as a label in a museum is useful for telling us why an artwork is emotionally moving. Neurology is still powerless before a large number of cerebral lesions. Nor is it clear how psychotropic medications work. We know a lot, and we know very little.

Yet for those aspects of the mind that can be studied, our interpretations have become extremely refined. Take theory of mind, with which my younger son had us begin this long journey. We know it requires attention to words and gestures of others, which itself requires a proper processing of the sensorimotor inputs we perceive as our own, or interoception, and the perception of our body in space, or proprioception. It requires attention to appropriate signals.

Such a multipronged operation partakes of what is called executive function, which is mostly (not wholly) processed within the prefrontal cortex, the front ventricle of old, which used to be associated with sensus communis. Executive function enables us to control our impulses and work through our emotions (the old appetitive and sensitive souls). Elderly patients afflicted with neurodegenerative diseases that affect executive function, such as frontotemporal dementia, or FTD, have diminished inhibitions, and may tend to scoff down food, grope strangers, steal in supermarkets. But executive dysfunction per se is not visible on imaging. When its clinical manifestation coexists with imaging that shows structural changes in specific cortical areas, then a diagnosis like FTD may apply. Imaging, when abnormal, is just one part of the diagnostic process, in which the human patient remains at the center.

Understanding is a very dull occupation.

—Gertrude Stein, 1937

And there is no structural or functional signature for such developmental syndromes as attention-deficit and autism-spectrum disorders, which are now also understood as disorders of executive function. In the young, these cause difficulties in putting ideas together, accessing short-term memory, and filtering sensorimotor inputs. This, in turn, impedes the typical development of theory of mind, social cognition, and so on. These syndromes, which are clinical diagnoses, are based in part on neuropsychological testing. In both the young and the old, however, labels change according to theory and evidence. The syndromes are not fixed.

This is all a work in progress. Anything one writes about the sciences of mind will be in flux. What matters is the quality of subjective experience. Maybe my insular cortex was stimulated when I experienced insomnia, but even if new research were to reveal that different areas are at play, it would not change how it feels.

Yet the investigations go on. Research into interoceptive awareness and the connections between brain and gut brings the brain back into the body. Higher computing power improves imaging technologies. Optogenetics, the use of light to trigger the activation of targeted neurons, may eventually help cure psychiatric ailments such as depression. Brain-computer interfaces, in which various digital devices communicate with the brain, can already help paraplegics, the blind, and the deaf. They could have sinister applications and augur a dystopic brave new world, like that imagined in countless science fiction scenarios. But we are the creators of our technologies as well as of our fictions. No technology can reproduce the human imagination.

And so here is my considered answer: yes, my son, I do see you are thinking. But I will never see your thoughts. You will have to tell me what they are.